What is Claude Opus 4's performance on METR's task length evaluation?

15

Ṁ1139 · Jul 31

Less than 1 hour: 7%

1h to 1.5h: 10%

1.5h to 2h: 23%

2h to 3h: 48%

At least 3h: 12%

METR's evaluation measures AI performance by the length of tasks, measured by how long they take human experts, that a model can complete with a 50% success rate. This market predicts Claude Opus 4's 50% time horizon, as reported by METR.
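To make the "50% time horizon" idea concrete, here is a minimal sketch, not METR's actual pipeline. It assumes success probability follows a logistic curve in the log of human task time and solves for the length at which that curve crosses 50%. The function names, the toy data, and the half-open bucket boundaries are all assumptions made for illustration; the numbers are invented and are not METR results.

```python
import math

# Toy data, invented for illustration only: (human_minutes, successes, trials).
TOY_RUNS = [(25, 9, 10), (50, 7, 10), (100, 5, 10), (200, 3, 10), (400, 1, 10)]


def fit_time_horizon(records, iterations=50):
    """Fit P(success) = sigmoid(a + b * log2(minutes)) by Newton's method
    and return the task length (in minutes) where P(success) = 0.5."""
    xs = [math.log2(minutes) for minutes, _, _ in records]
    a, b = 0.0, 0.0
    for _ in range(iterations):
        g_a = g_b = h_aa = h_ab = h_bb = 0.0
        for x, (_, successes, trials) in zip(xs, records):
            p = 1.0 / (1.0 + math.exp(-(a + b * x)))
            weight = trials * p * (1.0 - p)   # curvature contribution
            resid = successes - trials * p    # gradient contribution
            g_a += resid
            g_b += resid * x
            h_aa += weight
            h_ab += weight * x
            h_bb += weight * x * x
        # Newton step: solve the 2x2 system H * delta = g.
        det = h_aa * h_bb - h_ab * h_ab
        a += (h_bb * g_a - h_ab * g_b) / det
        b += (h_aa * g_b - h_ab * g_a) / det
    # sigmoid(a + b * x) = 0.5 exactly when a + b * x = 0.
    return 2.0 ** (-a / b)


def resolution_bucket(horizon_minutes):
    """Map a horizon to one of this market's answers.
    Assumption: each range includes its lower bound and excludes its upper."""
    if horizon_minutes < 60:
        return "Less than 1 hour"
    if horizon_minutes < 90:
        return "1h to 1.5h"
    if horizon_minutes < 120:
        return "1.5h to 2h"
    if horizon_minutes < 180:
        return "2h to 3h"
    return "At least 3h"


if __name__ == "__main__":
    horizon = fit_time_horizon(TOY_RUNS)
    print(f"{horizon:.1f} minutes -> {resolution_bucket(horizon)}")
```

The toy success rates are symmetric around the 100-minute task, so the fitted horizon lands at about 100 minutes, which falls in the "1.5h to 2h" answer under the boundary assumption above. A score that sits exactly on a boundary would still come down to the market creator's judgment.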

If no score is provided by the end of July 2025, this market resolves as N/A. If there are multiple scores provided by METR, I'll use my best judgment. I won't trade in this market.

This question is managed and resolved by Manifold.
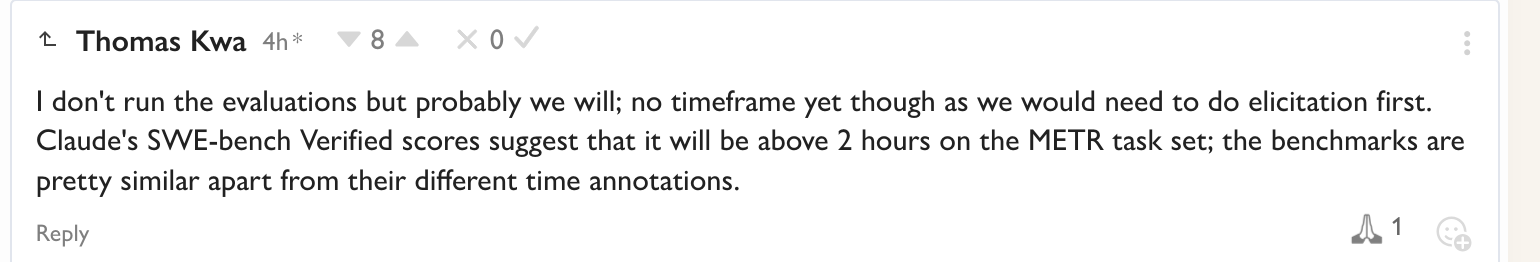

Thomas Kwa works at METR. Link to post:

https://www.lesswrong.com/posts/RnKmRusmFpw7MhPYw/cole-wyeth-s-shortform?commentId=cZWcjhHMvEwCwDWHv

@JoshYou Huh. I had thought that METR's results already allowed for best-of-N, which would be equivalent to, and redundant with, parallel processing, but apparently I was wrong. I redact my earlier comment and will try to do an apples-to-apples comparison.

Related questions

How many parameters does the new possibly-SOTA large language model, Claude 3 Opus, have?

Will Claude 4 achieve over 95% on the MMLU-Pro benchmark by end of 2025?

12% chance

Will Claude 3.5 Opus beat OpenAI's best released model on the arena.lmsys.org leaderboard?

9% chance

Claude Opus 4.x Release Date